When Google first set BERT loose on its search engine a year ago, it said BERT would affect one in 10 search results. That means roughly 120 billion search engine results pages (SERPs) were reshuffled due to BERT in the last 12 months.

Despite the effect on so many search results, Google originally said there was nothing SEOs needed to do to optimize for BERT.

In case you missed it, the Bidirectional Encoder Representations from Transformers (mercifully, BERT for short) is an open-source technique for natural language processing that Google released in 2018. Google applied BERT to Search queries in 2019. In simple terms, BERT helps Google Search better understand the way humans communicate.

Since BERT’s release, Google’s messaging about it has become more nuanced, revealing three ways BERT affects the outcome of search results. That’s why, in this post, we’ll show you how BERT is changing the results for certain types of queries and what you can do to get, or keep, your content in good standing on SERPs.

BERT prefers natural writing over keyword placement

In a January 2020 Google Webmaster Hangout, Google’s Webmaster Trends Analyst John Mueller was asked how to optimize content for BERT. His response made it clear that BERT put another (final?) nail in the coffin of keyword stuffing.

“Our recommendation there is essentially to write naturally,” Mueller said. “Kind of like a normal human would be able to understand. So instead of stuffing keywords as much as possible, kind of write naturally.”

How does BERT reduce the need for keyword placement?

BERT reads content bidirectionally, helping Google better understand the intent of an entire search query while relying less on individual keywords.

The key is the “B” in BERT, which stands for “bidirectional.” It means that, with BERT’s help, Google can read a query both left to right and right to left. It’s one of the biggest advancements in how Google understands queries.

Before BERT, Google read queries in one direction or the other. An example from Google is the sentence “I accessed the bank account.”

Without BERT’s bidirectional reading, Google would contextualize “bank” based on either what comes before the word (“I accessed the”) or what comes after (“account”). With BERT, Google understands “bank” based on “I accessed the” and “account.” So, Google knows that “bank” is about financial institutions and not the side of a river.
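The difference between reading one side of a word and reading both sides can be sketched in a few lines of Python. This is a toy illustration, not how BERT or Google Search actually works: the cue-word sets and the `split_context` and `guess_sense` helpers are hypothetical, invented here only to show why the words on both sides of “bank” matter.

```python
# Toy illustration only. BERT learns context from data; it does not use
# hand-written cue lists like these, which are hypothetical.
FINANCE_CUES = {"account", "deposit", "loan"}
RIVER_CUES = {"river", "shore", "water"}


def split_context(sentence, target):
    """Return the words to the left and to the right of the target word."""
    tokens = sentence.lower().rstrip(".").split()
    position = tokens.index(target)
    return tokens[:position], tokens[position + 1:]


def guess_sense(context_words):
    """Guess the sense of 'bank' from whichever cue set matches more words."""
    words = set(context_words)
    finance_hits = len(words & FINANCE_CUES)
    river_hits = len(words & RIVER_CUES)
    if finance_hits > river_hits:
        return "financial institution"
    if river_hits > finance_hits:
        return "river bank"
    return "ambiguous"


left, right = split_context("I accessed the bank account.", "bank")
print("left only: ", guess_sense(left))          # sees "i accessed the"
print("right only:", guess_sense(right))         # sees "account"
print("both sides:", guess_sense(left + right))  # sees everything around "bank"
```

In this sketch, a reader that has only seen “I accessed the” has no cue for which “bank” is meant, while a reader that sees both sides does.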

In complex queries, bidirectional reading produces search results that better match intent and rely less on keyword placement.

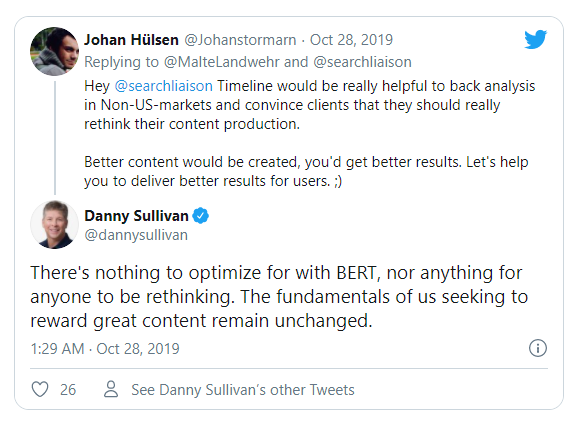

Here’s an example search query from Google to illustrate how it works.

Pre-BERT, Google provided results that included the keywords “estheticians” and “stand” prominently even though the content didn’t really match the query’s intent. With BERT, it’s a different story. Google returned content that directly answers what the searcher asked. More importantly, notice that the top result doesn’t include the keywords prominently (in the title or opening paragraph). BERT has helped Google match intent over keywords.

Takeaway: Write content for humans, not as scaffolding for keywords

BERT can sniff out query intent without matching keywords. For content creators, that means no more need to awkwardly cram keywords, especially long-tail ones, into your copy. Instead, focus on writing content that’s easy for a human to read and understand. If you do that, BERT will help Google understand the meaning of your content and land you higher up on SERPs.

Here are some tips on writing naturally, so BERT better understands the meaning of your content:

- Write for your intended audience: Consider their tone, style, and level of sophistication, so your content matches how they ask questions.

- Keep your content concise: Since keyword density matters less, you can get the point across without worrying about how many times you’ve added specific words.

- Double-check for good grammar and simplicity: If your writing would confuse a human, it’ll trip up BERT.
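If you want a rough sanity check against accidental keyword stuffing, a short script can show which words dominate a draft. The sketch below is our own assumption about what such a check might look like, not anything Google has described: the `keyword_density` function, the stop-word list, and the sample text are all hypothetical.

```python
import re
from collections import Counter

# Hypothetical, deliberately tiny stop-word list for illustration.
STOP_WORDS = {"a", "an", "and", "the", "of", "to", "in", "for", "is", "our"}


def keyword_density(text, top_n=3):
    """Return the top_n most frequent non-stop words and their share of the text."""
    words = [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOP_WORDS]
    if not words:
        return []
    counts = Counter(words)
    return [(word, count / len(words)) for word, count in counts.most_common(top_n)]


draft = (
    "Best running shoes for beginners. Our running shoes guide covers "
    "running shoes for every budget, and the best running shoes to buy."
)
for word, share in keyword_density(draft):
    print(f"{word}: {share:.0%} of non-stop words")
```

A word that takes up a large share of a short passage is a hint that the copy was written around the keyword and not around the reader.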

BERT understands the intent of long-tail keywords

In another Google Webmaster Hangout in May 2020, Mueller confirmed that BERT’s biggest strength is looking for relevant results for long-tail keywords.

“When it comes to pages themselves, we try to figure out what are those pages actually about and how do those pages map to those specific queries that we’ve got,” Mueller said. “In particular, when these are long queries where we need to understand what is the context here, what is something that people are actually searching for within this query.”

How does BERT understand the intent of long-tail keywords?

BERT uses something called “attention scores,” along with bidirectional reading, to better understand what web pages are about and how they map to long-tail keywords.

Here’s how it works. BERT compares every word in a sentence with every other word. The result of that comparison is an attention score.

An attention score indicates how much influence each of the other words in the sentence should have on the word being evaluated. That context helps a machine understand the intended meanings of ambiguous words.

Google explains this with an example. Say you gave BERT these two sentences:

“The animal didn’t cross the street because it was too tired” and “The animal didn’t cross the street because it was too wide.”

Attention scores tell BERT that in the first sentence, “animal” is more important in the representation of “it,” while in the second sentence, “street” is more important in the representation of “it.”

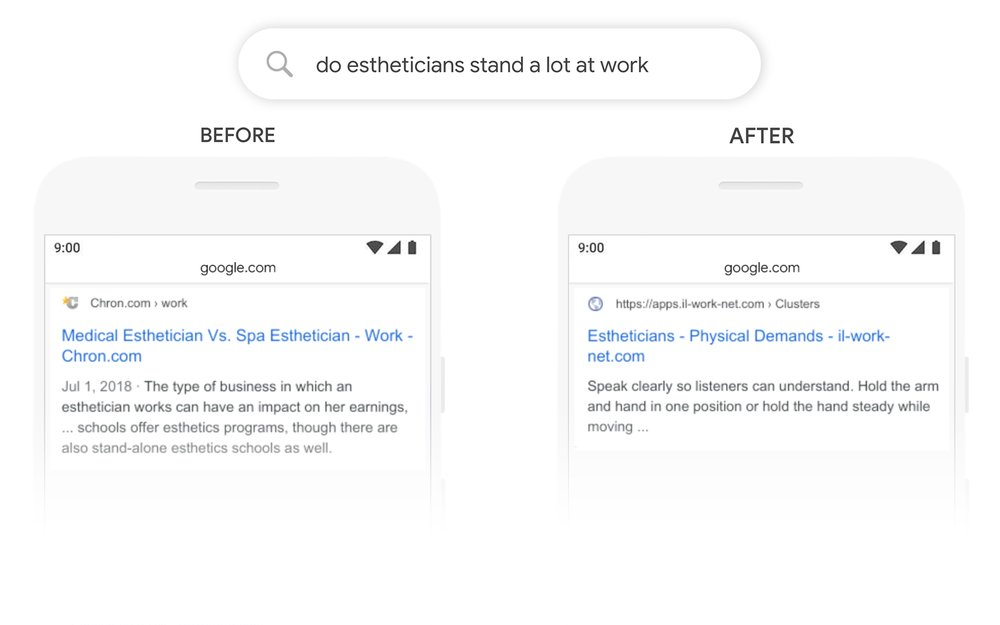
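The word-by-word comparison described above can be sketched as a small attention calculation. This is a minimal sketch under stated assumptions: it uses the standard scaled dot-product and softmax steps from Transformer models, but the two-dimensional word vectors are made up for illustration (chosen so that “it” resembles “animal,” as in the “too tired” sentence), and real BERT compares learned projections of much larger vectors across many layers.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(query, keys):
    """Scaled dot-product attention weights of one word over all words."""
    scale = math.sqrt(len(query))
    raw = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    return softmax(raw)


# Hypothetical word vectors, invented for this example only.
words = ["animal", "street", "it"]
vectors = {
    "animal": [1.0, 0.1],
    "street": [0.1, 1.0],
    "it": [0.9, 0.2],
}

weights = attention_weights(vectors["it"], [vectors[w] for w in words])
for word, weight in zip(words, weights):
    print(f"it -> {word}: {weight:.2f}")
```

With these invented vectors, “animal” receives a larger weight than “street” when the model builds its representation of “it.” Swapping in vectors where “it” resembles “street” would flip the result, which mirrors the “too wide” sentence.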

When Google tested this ability on a search query, something interesting happened. BERT understood the importance of words Google would have previously ignored.

Originally, Google ignored the word “to.” But once BERT was implemented, Google understood that “to” changed the whole meaning of the query. BERT helps Google find more relevant matches to complicated, long-tail keywords.

Takeaway: Create more specific, relevant content for long-tail search

BERT specifically affects the SERPs for long, complex search queries. We heard that from Mueller and see that in Google’s testing. So, optimizing for BERT means focusing on your long-tail strategy.

To keep or improve your ranking for long-tail keywords, you’ll need content that directly addresses the intent of those niche queries. BERT will overlook your content if it’s keyword-rich but not specifically relevant.

Here are a few tips for writing specifically, so BERT chooses to surface your content on SERPs:

- Give each page a tight focus: BERT rewards pages that specifically answer search questions.

- Keep subheads clear and on track: Each of your H2s should directly answer your title and, in turn, the keyword you’ve targeted.

- Have one idea per paragraph: BERT is used to source Featured Snippets, which require a paragraph to answer just one question.

There’s an added bonus when you create content for BERT. Since voice searches are more conversational and include long-tail keywords, optimizing for BERT will also help you optimize for voice.

BERT exposes inaccurate content

Google wants to deliver results that are reliable as well as relevant. Google’s VP of Search Pandu Nayak recently noted that they’re using BERT to expose factually incorrect content.

“We also just launched an update using our BERT language understanding models to improve the matching between news stories and available fact checks,” Nayak said.

How does BERT improve fact-check matching of search results?

BERT uses its ability to derive context from language to help Google better match articles with relevant fact-checking sources.

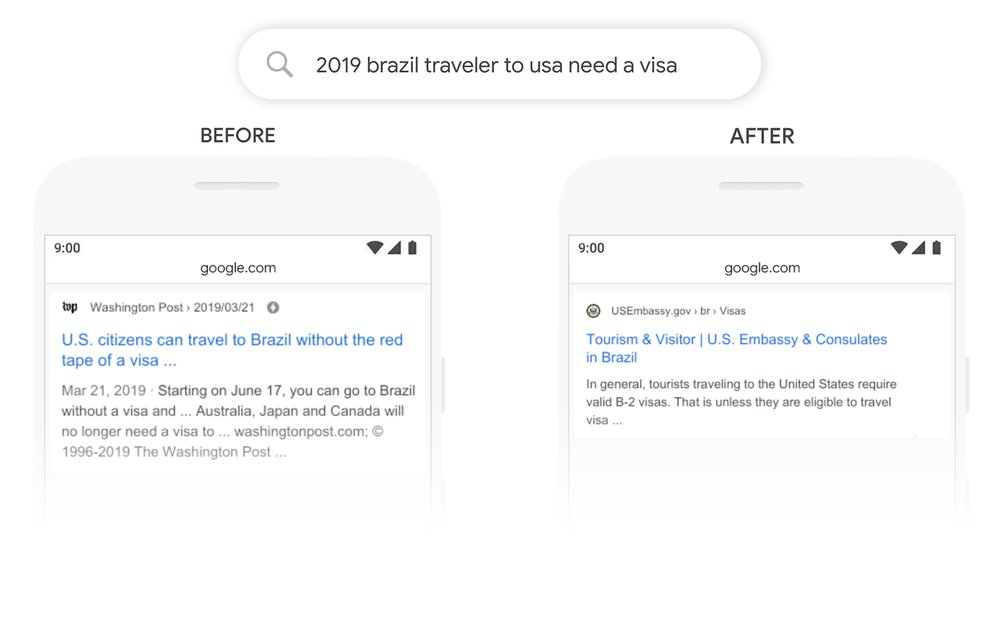

Fact checking is not new to Google. Google released the fact-check feature in 2016 and expanded it in 2017.

To be clear, Google does not actually check facts. Instead, Google matches relevant fact-checking articles created by publishers like Snopes and PolitiFact to content and queries.

BERT improves Google’s ability to correctly match fact-checking posts to content. It works in the same way BERT matches search query intent with relevant content.

According to Nayak’s comments, Google currently uses BERT only to match news stories with fact-checking content. But Google is driven to deliver reliable results, and BERT continues to be applied to more features of Search, such as Images. So it’s a fair bet that BERT will eventually be used to match all search results with available fact-checking content.

Takeaway: Double down on fact checking all content

The number of fact-checking organizations continues to increase, and BERT now helps to better surface their content. Even if the results of fact checking don’t directly affect search results, you don’t want your article sitting next to a fact-check post showing why it’s inaccurate.

Here are a few resources to help you fact check content:

- A fact-checking checklist you can use for each piece of content you publish

- A list of fact-checking tools for your content creator toolbox

- Google’s Fact Check Explorer to see what kind of fact-checking content exists about your topic

Google BERT isn’t out to penalize your content

Past updates from Google have sought to penalize “black hat” SEO techniques. Mueller says that’s not what BERT is designed to do.

“So, it’s not necessarily the case that because Google understands the pages better we suddenly decided to kind of penalize a set of individual pages,” he explains. “Because we’re trying to understand these pages better, not try to understand what things people are doing wrong.”

Instead, he says that BERT is purely about helping searchers find better answers to their questions. Keep the searcher and their intended context in mind, and you’ll be well on your way to optimizing for BERT.